Background and Issue

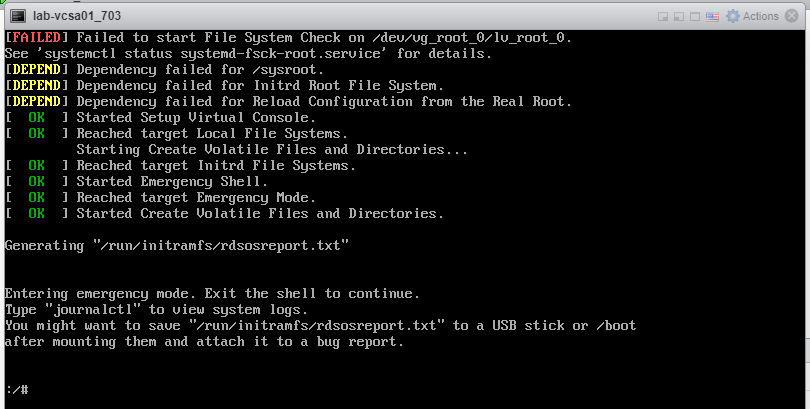

Last night I had a networking/storage blip in my home lab that caused my VMware hosts to lose connectivity to the datastores hosted on my NAS. When I signed in, my VMs were still powered on but clearly not functioning in any capacity (as expected). Much to my dismay, once I finally resolved the network issue, rescanned the storage, and rebooted my VMs, vCenter failed to start and presented the error below:

[FAILED] Failed to start File System Check on /dev/vg_root_0/lv_root_0

See 'systemctl status systemd-fsck-root.service' for details

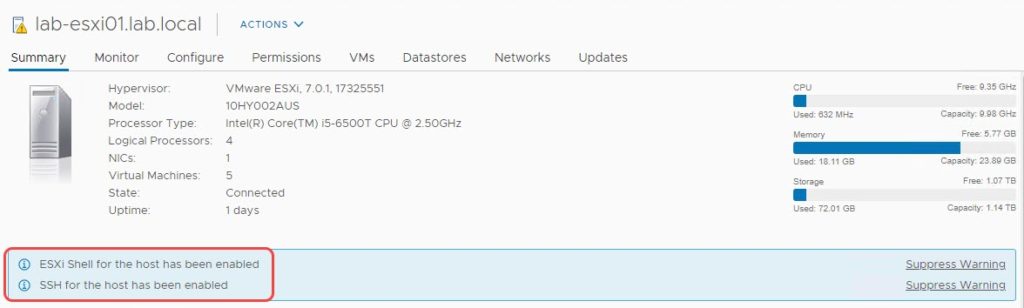

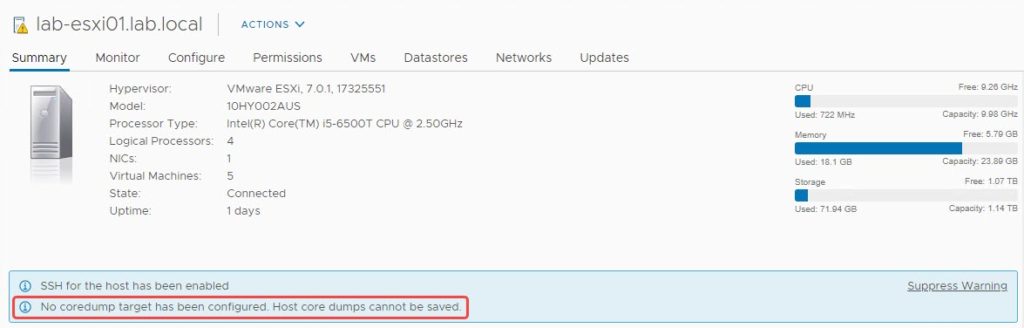

Troubleshooting ‘vCenter Failed to Start’

This error is common after losing access to storage, and VMware has a fairly well-known KB about it: https://kb.vmware.com/s/article/2149838. The article doesn’t go into the amount of detail I’d like to see, so I decided this would be a perfect time to do a full write-up on my experience! That’s what blogs are for, right?

NOTE BEFORE BEGINNING:

If your vCenter failed to start, I highly recommend shutting down the vCenter and taking a snapshot! The KB article doesn’t mention this, but it is best practice. In production, always take a powered-off snapshot before making any changes through the CLI that involve filesystem modifications. If you’re doing this in a lab, I still recommend it so that you can attempt this fix multiple times. I ran through this fix several times to make sure the process was solid and consistent.

- Reboot VCSA and enter Emergency Mode

- In my case for vCenter 7, it automatically boots into Emergency Mode and drops you into a CLI prompt where you can run commands.

- If you do not see “Entering emergency mode.” with a prompt after it (as seen in the above screenshot), then you’ll need to follow the KB’s process to boot into it.

- Find the filesystems by running commands

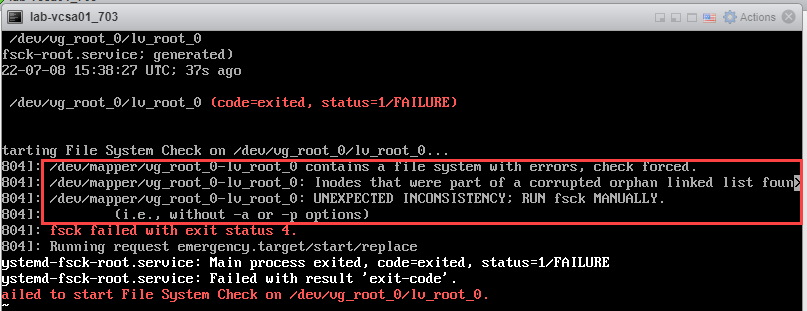

- Start with this step! In the initial message, it says to run the following command for more details on this error:

systemctl status systemd-fsck-root.service

That command returns the output below, which shows the entry behind the fsck failure. It confirms that the errors are on /dev/mapper/vg_root_0-lv_root_0, which maps to /dev/vg_root_0/lv_root_0.
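The two paths above name the same logical volume: device-mapper joins the volume group and logical volume names with a single hyphen, and doubles any hyphen that is already part of either name. Here is a minimal sketch of that conversion. The function name `lv_to_mapper` is my own and is not from the KB.

```shell
#!/bin/sh
# Convert an LVM path (/dev/<vg>/<lv>) to its device-mapper path.
# device-mapper joins VG and LV with one hyphen and doubles any
# hyphen that is part of either name.
# NOTE: lv_to_mapper is a hypothetical helper, not a VCSA tool.
lv_to_mapper() {
    vg=$(printf '%s\n' "$1" | cut -d/ -f3 | sed 's/-/--/g')
    lv=$(printf '%s\n' "$1" | cut -d/ -f4 | sed 's/-/--/g')
    printf '/dev/mapper/%s-%s\n' "$vg" "$lv"
}

lv_to_mapper /dev/vg_root_0/lv_root_0
# prints /dev/mapper/vg_root_0-lv_root_0
```

This is only a convenience for working out which name to pass to later commands. The path reported by the failing service is still the one to trust.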

- The KB article recommends running the following command:

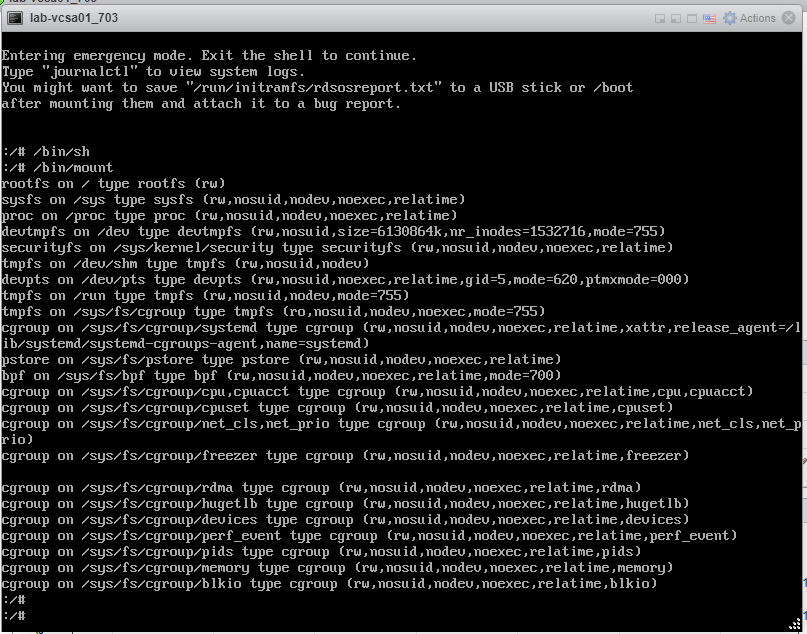

/bin/sh

This returned nothing for me, but the result depends on the specific error that you see.

- The KB article also recommends checking the mounted filesystems using this command:

/bin/mount

For me, this returned the results in the screenshot below, but nothing matched /dev/vg_root_0/lv_root_0 that the error complained about.

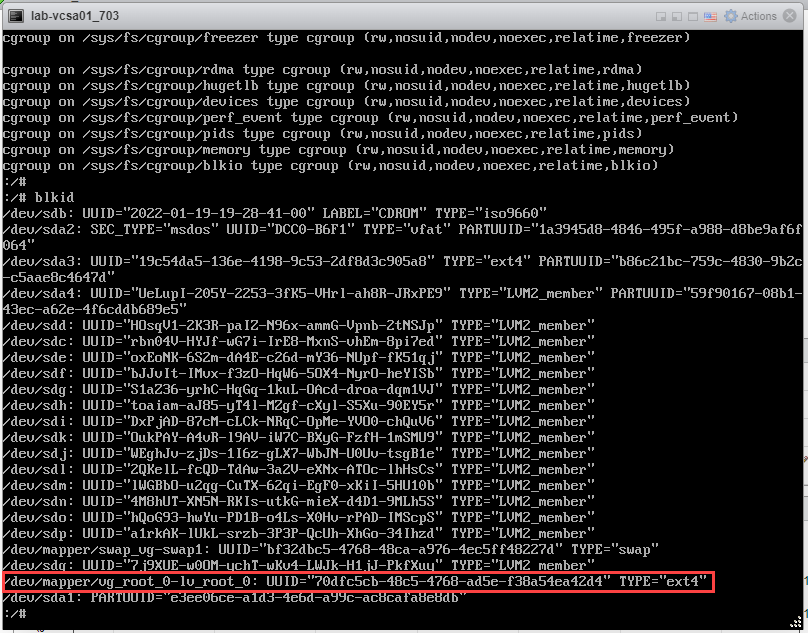

- The KB then has you check the mapping for a valid filesystem using this command:

blkid

As you can see below, the results show that /dev/mapper/vg_root_0-lv_root_0 does contain a valid EXT4 filesystem. That’s good news for us: the filesystem is still recognizable, so we can likely repair it.
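If you would rather pull the filesystem type out of the blkid output than read it by eye, something like the sketch below should work. The helper name `fs_type_from_blkid_line` and the sample line are my own illustrations, and the UUID is a placeholder rather than real output from my appliance.

```shell
#!/bin/sh
# Extract the TYPE="..." value from a single line of blkid output.
# NOTE: fs_type_from_blkid_line is a hypothetical helper name.
fs_type_from_blkid_line() {
    printf '%s\n' "$1" | sed -n 's/.* TYPE="\([^"]*\)".*/\1/p'
}

# Illustrative line only; the UUID is a made-up placeholder.
sample='/dev/mapper/vg_root_0-lv_root_0: UUID="00000000-0000-0000-0000-000000000000" TYPE="ext4"'
fs_type_from_blkid_line "$sample"
# prints ext4
```

On a system with the util-linux version of blkid, `blkid -s TYPE -o value /dev/mapper/vg_root_0-lv_root_0` should print just the type directly. I have not confirmed that every VCSA build behaves that way in emergency mode.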


- Fix the disk using e2fsck

- To fix this, we need to use the command that VMware provided in the KB article. Make sure to swap out /dev/mapper/vg_root_0-lv_root_0 for the mapping you found in step 2.1. The command I used was:

e2fsck -y /dev/mapper/vg_root_0-lv_root_0

- You will see that several files are fixed during this process. It’s similar to using dism or sfc in Windows to check files.
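When e2fsck finishes, its exit status tells you what happened, and the value is a sum of the conditions documented in the e2fsck man page. The sketch below decodes that status into words. The function name `describe_e2fsck_status` is my own, and the wording of each message is a paraphrase of the man page.

```shell
#!/bin/sh
# Decode an e2fsck exit status. The status is a bitmask whose bits
# are documented in the e2fsck man page.
# NOTE: describe_e2fsck_status is a hypothetical helper name.
describe_e2fsck_status() {
    status=$1
    if [ "$status" -eq 0 ]; then
        echo "no errors"
        return 0
    fi
    out=""
    for pair in \
        "1:file system errors corrected" \
        "2:system should be rebooted" \
        "4:errors left uncorrected" \
        "8:operational error" \
        "16:usage or syntax error" \
        "32:canceled by user" \
        "128:shared library error"
    do
        bit=${pair%%:*}    # number before the first colon
        text=${pair#*:}    # message after the first colon
        if [ $((status & bit)) -ne 0 ]; then
            out="${out:+$out; }$text"
        fi
    done
    echo "$out"
}

describe_e2fsck_status 1
# prints: file system errors corrected
```

In emergency mode you could run `describe_e2fsck_status $?` immediately after the e2fsck command. A status that includes 4 means some errors were left uncorrected, which is when I would restore the snapshot or call support rather than reboot.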


- Once the file check completes, perform a graceful reboot of the VCSA by entering reboot. The appliance should restart and begin to boot normally. Please remember that it generally takes anywhere from 5 to 15 minutes for all of the VCSA services to come up. This is a perfect time to grab a cup of coffee!
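If you would rather not keep refreshing the browser while the services start, a small retry loop run from another machine can do the waiting for you. This is a generic sketch and not something from the KB: `wait_until` is a name I made up, and the hostname in the commented example is a placeholder for your own vCenter.

```shell
#!/bin/sh
# Retry a command until it succeeds or the attempts run out.
# usage: wait_until <attempts> <delay_seconds> <command> [args...]
# NOTE: wait_until is a hypothetical helper name.
wait_until() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Trivial demonstration: the command succeeds on the first attempt.
wait_until 3 0 true && echo "ready"

# Assumed real-world use (hostname is a placeholder): poll every 30
# seconds for up to 15 minutes until the web client answers.
# wait_until 30 30 curl -k -s -f -o /dev/null https://vcsa.example.local/ui/
```

Once you can log in, running `service-control --status --all` from the appliance shell is a reasonable way to confirm which services are running and which are stopped.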

- Since you definitely took a snapshot at the beginning *stares intently, knowing you followed best practices* don’t forget to delete it after you’re 100% certain your vCenter is healthy! In production, a call to support isn’t a bad idea just to have them double check.

Despite the official KB saying that this “vCenter failed to start” error is fairly common, this is actually the first time I’ve seen or heard of this issue, and I’ve been working with VMware for several years in 50+ customer environments. I suppose it’s just been good luck, but hopefully this guide helps someone, somewhere. That wraps it up for this post, so remember guys: Break it ’till you make it, never test in production!

Thank you, this saved me after a power outage.

Thank you. Had this exact issue, your explanation came up at the first search result. I had the same exact errors you showed. I ran the same commands you shared, and everything now appears to be up and running again.

Thank you a lot

Legend! Thanks for helping me out get production back on 😀

Thank you thank you thank you!! Lifesaver!

Add one more happy customer to the list! Saved me from rebuilding my VCSA from scratch. Excellent, concise article. Thank you!

Thank you! It restored my vcsa to normal.

Please to delete or ignore my previous message. I stand corrected. It worked!

I shouldn’t have misjudged so quickly because I tried something similar twice before and those failed. I am humbled.

This definitely worked like a charm and you have my 100% gratitude 🙏🏽

No worries, I’m glad this helped you out! I know the frustration of trying to get something working, sometimes you just need to step away and come back to it. It happens to me all the time!!

Thanks for write up! Your article got straight to the point and fixed my vCenter Server Appliance after a network switch replacement interrupted traffic between the VCSA and the NAS.

That’s exactly what happened to me and why I wrote out this article! I’m glad it helped you!

Worked like charm, nicely written.

thank you

Worked for me

I share Valentin’s sentiment. I found the KB article first and it was no use. Your summary saved me considerable time and pain,

Thank you, u saved me a lot of time